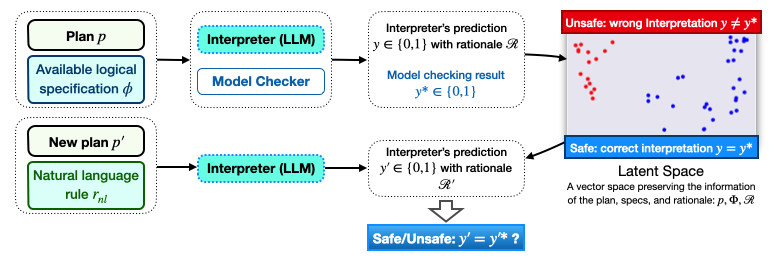

Framework Overview

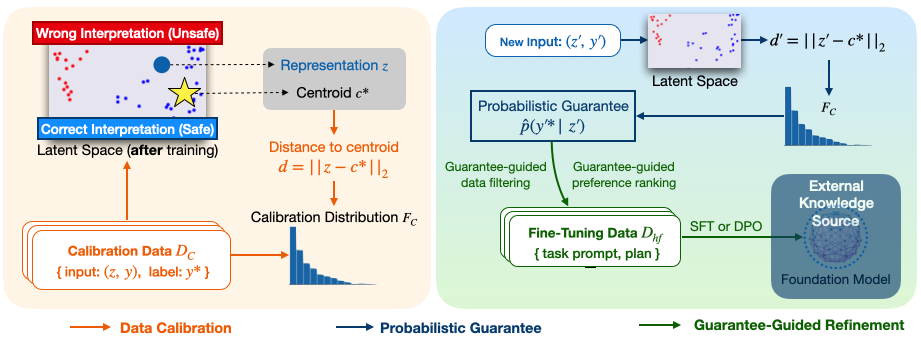

We introduce RepV, a neurosymbolic verifier that bridges formal methods and deep representation learning by learning a latent space where safe and unsafe plans become linearly separable. Starting from a small seed set of plans labeled by an off-the-shelf model checker, RepV trains a lightweight projector that embeds each plan—along with its LLM-generated rationale—into a low-dimensional, safety-aware space. A frozen linear boundary then certifies compliance for unseen natural-language rules in a single forward pass, providing a probabilistic guarantee of rule satisfaction rather than a binary decision.
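The pipeline described above can be illustrated with a minimal sketch, which is not RepV's actual implementation. It assumes plan-plus-rationale embeddings are already available as fixed-length vectors (synthetic Gaussian data stands in for them here), uses a purely linear projector, and uses a sigmoid of the signed distance to the frozen boundary as a stand-in for the probabilistic score. All names (`make_seed_set`, `train_projector`, `safety_score`) and hyperparameters are hypothetical.

```python
import numpy as np

def make_seed_set(n=200, dim=16, seed=0):
    """Synthetic stand-in for embeddings of plans labeled by a model checker."""
    rng = np.random.default_rng(seed)
    labels = rng.integers(0, 2, size=n)            # 1 = safe, 0 = unsafe
    direction = rng.normal(size=dim)
    direction /= np.linalg.norm(direction)
    # Shift safe and unsafe samples in opposite directions along one axis.
    x = rng.normal(size=(n, dim)) + 2.0 * np.outer(2.0 * labels - 1.0, direction)
    return x, labels

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_projector(x, y, w, b, latent_dim=2, lr=0.1, epochs=500, seed=0):
    """Fit a linear projector P so the frozen boundary (w, b) separates P @ x."""
    rng = np.random.default_rng(seed)
    P = rng.normal(scale=0.1, size=(latent_dim, x.shape[1]))
    for _ in range(epochs):
        p = sigmoid((x @ P.T) @ w + b)              # predicted P(safe) per sample
        # Gradient of the mean binary cross-entropy w.r.t. P; w and b stay frozen.
        grad = np.outer(w, (p - y) @ x) / len(y)
        P -= lr * grad
    return P

def safety_score(P, w, b, embedding):
    """Single forward pass: project, then score against the frozen boundary."""
    return float(sigmoid((P @ embedding) @ w + b))

# Usage: train on a seed set, then score unseen embeddings.
x, y = make_seed_set()
w, b = np.array([1.0, 0.0]), 0.0                    # frozen linear boundary (assumed)
P = train_projector(x[:150], y[:150], w, b)
held_out = [safety_score(P, w, b, e) for e in x[150:]]
print(f"mean held-out score: {np.mean(held_out):.3f}")
```

Keeping the boundary fixed and training only the projector means the decision rule itself never changes. Only the geometry of the latent space is learned, which is one plausible reading of "frozen linear boundary" in the description above.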

Beyond verification, RepV enables guarantee-guided refinement of foundation models: the probabilistic guarantee scores serve as reward signals for fine-tuning the model or for ranking its generated plans, improving the likelihood of producing specification-compliant actions without human intervention. This makes RepV an efficient and scalable framework for safety-critical reasoning and plan generation across diverse robotic and simulation environments.
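As a rough illustration of the ranking use case, the sketch below orders candidate plans by a verifier score and keeps those above a threshold. The scoring function, the example plans, their scores, and the 0.9 threshold are all hypothetical placeholders. In practice the score would come from the verifier rather than a lookup table.

```python
from typing import Callable, List, Tuple

def rank_plans(plans: List[str],
               score_fn: Callable[[str], float]) -> List[Tuple[str, float]]:
    """Score each candidate plan and sort from most to least likely compliant."""
    scored = [(plan, score_fn(plan)) for plan in plans]
    return sorted(scored, key=lambda item: item[1], reverse=True)

def select_compliant(ranked: List[Tuple[str, float]],
                     threshold: float = 0.9) -> List[str]:
    """Keep only plans whose score meets the (assumed) acceptance threshold."""
    return [plan for plan, score in ranked if score >= threshold]

# Hypothetical scores standing in for the verifier's output.
toy_scores = {
    "stop at red light, then turn": 0.97,
    "turn without stopping": 0.12,
    "slow down, then turn": 0.64,
}
ranked = rank_plans(list(toy_scores), toy_scores.__getitem__)
accepted = select_compliant(ranked)
```

The same scores could instead be fed to a fine-tuning loop as rewards. Ranking is shown here only because it needs no training machinery.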